RAG, REALM, RETRO & Beyond: The Evolution of Retrieval-Augmented Models

Breaking Down the Basics: A Simple Guide to RAG, REALM, and RETRO AI Models.

Language models have changed a lot in recent years. Many now draw on information from external sources when they answer, which helps them handle factual questions more accurately. This is the idea behind retrieval-augmented models such as RAG, REALM, and RETRO. In this post, we'll look at how RAG, REALM, and RETRO work, their strengths and weaknesses, how they differ from each other, and where they improve on traditional LLMs. We'll also think about what might come next in this area.

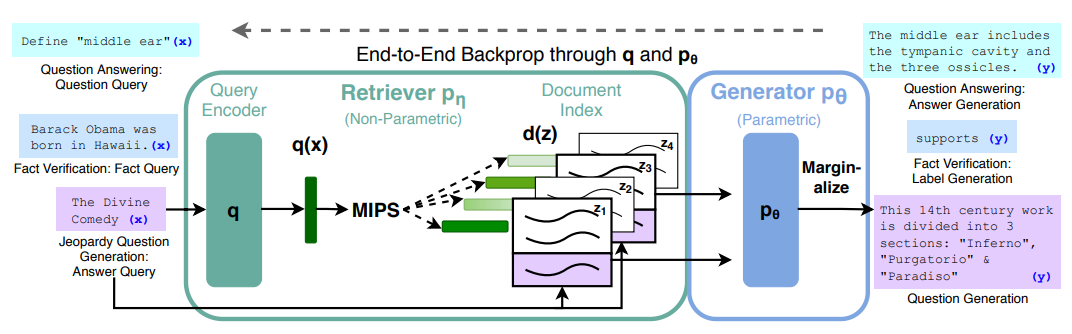

RAG (Retrieval-Augmented Generation):

RAG pairs a language model with a retriever that searches a large collection of documents. Grounding the answer in retrieved text helps RAG give more accurate answers, especially when you need specific facts. However, RAG's answers are only as good as its information sources, and it sometimes struggles to blend the information it finds smoothly into its answers.

RAG Mechanism:

Understanding the Retrieval-Augmented Generation (RAG) model means looking at how it combines retrieval and generation through conditional probability. At its core, RAG estimates the probability of generating an appropriate answer A given a specific query Q and a set of retrieved documents D1, D2, …, Dn. This is represented mathematically as:

\[ P(A \mid Q, D_1, D_2, \ldots, D_n) \]

This equation signifies the model’s effort to find the likelihood of the answer A when we have a query Q and relevant documents D1, D2, …, Dn. The RAG model achieves this in two primary steps:

Document Retrieval: For a given query Q, the model first retrieves a set of documents that are likely to contain relevant information. This step involves estimating \(p_\eta(D_1, D_2, \ldots, D_n \mid Q)\), which is the probability that the documents are relevant to the query.

Response Generation: Following retrieval, the model uses both the query and the gathered documents to generate an answer A. This step involves approximating \(p_\theta(A \mid Q, D_1, D_2, \ldots, D_n)\), the probability of generating the answer given both the query and the retrieved documents. Combining the two steps, the overall RAG process can be expressed as:

\[ P(A, D_1, \ldots, D_n \mid Q) = p_\eta(D_1, \ldots, D_n \mid Q)\, p_\theta(A \mid Q, D_1, \ldots, D_n) \]

In practice, RAG sums this product over the top retrieved documents to obtain the probability of the answer given the query alone.

Through this sophisticated approach, the RAG model effectively maximizes the chances of producing the most relevant and accurate answer A based on the query Q and the information extracted from the selected documents D1, D2, …, Dn. This mechanism showcases the innovative blend of retrieval and generation, pushing the boundaries of what language models can achieve.
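To make the two steps concrete, here is a minimal sketch in plain Python. It assumes the common RAG formulation in which the probability of an answer is the sum, over the retrieved documents, of the retrieval probability times the generation probability. The function name and all the numbers are made up for illustration; a real model would produce these probabilities with its retriever and generator.

```python
def marginal_answer_probability(retrieval_probs, answer_probs):
    """Return sum_i p_eta(D_i | Q) * p_theta(A | Q, D_i)."""
    if len(retrieval_probs) != len(answer_probs):
        raise ValueError("Need one answer probability per retrieved document.")
    return sum(p_doc * p_ans for p_doc, p_ans in zip(retrieval_probs, answer_probs))


# Hypothetical values for three retrieved documents D1, D2, D3.
retrieval_probs = [0.5, 0.25, 0.25]  # p_eta(D_i | Q), normalized over the top 3
answer_probs = [0.8, 0.4, 0.0]       # p_theta(A | Q, D_i) for one candidate answer A

p_answer = marginal_answer_probability(retrieval_probs, answer_probs)
print(p_answer)
```

A document that is both likely to be retrieved and strongly supports the answer (D1 here) contributes the most to the total, while a document that does not support the answer (D3) contributes nothing.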

A Simple Way to Use RAG with Hugging Face:

For those looking to get started with the RAG model, a straightforward approach is to use the implementation provided by Hugging Face's Transformers library. This popular library simplifies the process, allowing you to leverage RAG's capabilities with just a few lines of code.

Hugging Face hosts RAG checkpoints that were pre-trained and fine-tuned by Facebook AI, such as facebook/rag-token-nq, so the model is readily available for tasks like question answering. The model combines a retriever with a sequence-to-sequence generator and produces its responses by first fetching relevant passages from a document index.

To use RAG from Hugging Face, you first need to install the Transformers and Torch libraries, along with the datasets and faiss libraries that the retriever depends on. Once installed, you can initialize the RAG components – the tokenizer, retriever, and the model itself – directly from Hugging Face's model repository.

The process is as follows:

Initialize the Tokenizer: This component converts text inputs into a format that the model can understand.

Set Up the Retriever: The retriever fetches relevant documents based on the input query.

Load the RAG Model: The model uses the retrieved documents and the input query to generate an answer.

Here's a basic example of how you can use RAG for a simple question:

    # Import necessary classes
    import torch
    from transformers import RagRetriever, RagTokenForGeneration, RagTokenizer

    MODEL_NAME = "facebook/rag-token-nq"

    # Initialize tokenizer, retriever, and model once, outside the function.
    # use_dummy_dataset=True loads a small sample index instead of the full one.
    tokenizer = RagTokenizer.from_pretrained(MODEL_NAME)
    retriever = RagRetriever.from_pretrained(MODEL_NAME, index_name="exact", use_dummy_dataset=True)
    model = RagTokenForGeneration.from_pretrained(MODEL_NAME, retriever=retriever)


    # Function to generate an answer to a question
    def generate_answer(question):
        # Process the question
        inputs = tokenizer(question, return_tensors="pt")
        # Generate the answer without tracking gradients
        with torch.no_grad():
            generated_ids = model.generate(input_ids=inputs["input_ids"])
        # Decode and return the answer
        return tokenizer.decode(generated_ids[0], skip_special_tokens=True)


    # Example usage
    question = "What is the capital of Saudi Arabia?"
    print(generate_answer(question))

This code demonstrates how easily you can implement RAG for question-answering tasks. With the full document index, a question like "What is the capital of Saudi Arabia?" should return "Riyadh". Because the example sets use_dummy_dataset=True to keep the download small, the retriever only sees a tiny sample of passages, so the answer it prints may be wrong.

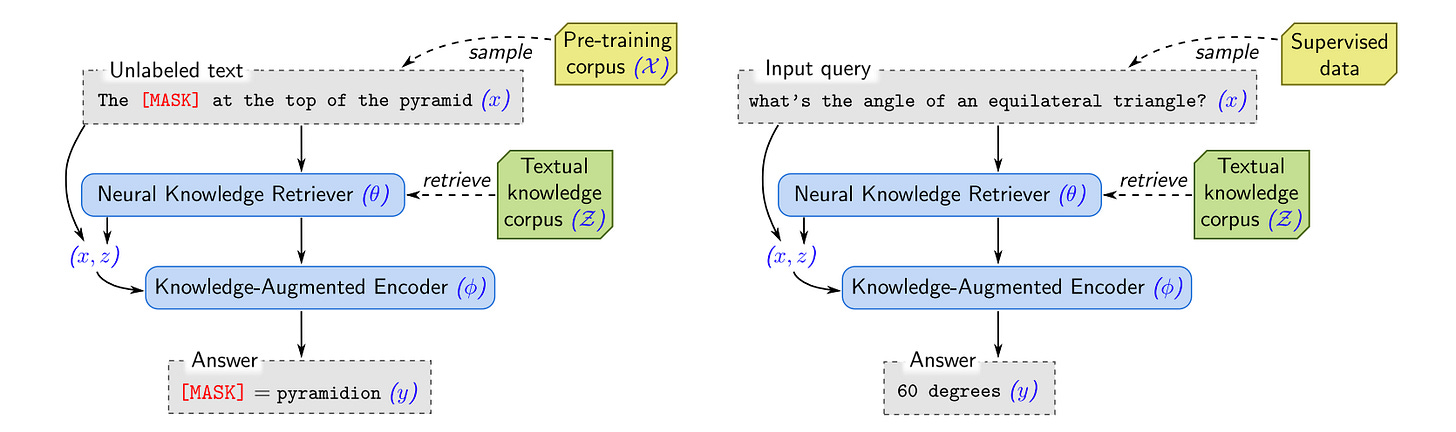

REALM (Retrieval-Augmented Language Model):

REALM is similar to RAG but works a bit differently. It learns to find useful documents and make answers at the same time. This makes REALM really good at giving relevant and correct answers. The downside is that it can be complex and needs a lot of computing power. Like RAG, it also depends on having good sources of information.

REALM Mechanism:

REALM, much like RAG, carries out its retrieval and generation processes in a sequential manner:

Retrieval Phase: In the first phase, REALM identifies relevant documents or information snippets that can assist in answering a query. This process is rooted in the concept of information retrieval, where the model is trained to search a vast database of texts to find the most pertinent pieces of information relative to the input query.

Language Modeling Phase: Once relevant documents are retrieved, REALM uses this information, combined with the original query, to generate a response. This step involves a language model that synthesizes information from both the query and the retrieved texts to construct an answer.

The retrieval phase can be represented in a probabilistic framework as follows:

\[ P(D \mid Q) \]

This equation calculates the probability of a document D being relevant given a query Q. REALM optimizes this retrieval probability to ensure that only the most pertinent documents are considered in the next phase.
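As a rough illustration of how a retrieval probability like P(D∣Q) can be computed, the sketch below takes a softmax over inner products between a query embedding and document embeddings, which is the form described in the REALM paper. The function name and the tiny 3-dimensional vectors are hypothetical; a real system would use embeddings from a trained encoder and a far larger document collection.

```python
import math


def retrieval_distribution(query_vec, doc_vecs):
    """Softmax over query-document inner products: a sketch of P(D | Q)."""
    scores = [sum(q * d for q, d in zip(query_vec, doc)) for doc in doc_vecs]
    max_score = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical embeddings for one query and three documents.
query_vec = [1.0, 0.0, 1.0]
doc_vecs = [
    [0.9, 0.1, 0.8],  # close to the query
    [0.0, 1.0, 0.0],  # unrelated to the query
    [0.5, 0.5, 0.5],  # partially related
]

probs = retrieval_distribution(query_vec, doc_vecs)

# Keep only the indices of the two most relevant documents for the next phase.
top_k = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:2]
print(probs, top_k)
```

Only the highest-scoring documents are passed on, which mirrors the idea that just the most pertinent documents are considered in the next phase.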

The language modeling phase, which follows the retrieval, can be represented as:

\[ P(A \mid Q, D) \]

Here, A is the generated answer, Q is the input query, and D represents the retrieved documents. This equation signifies how the model calculates the probability of generating a specific answer based on both the query and the retrieved information.

Together, these two phases enable REALM to not only understand and process the input query but also to enhance its responses by integrating external, contextually relevant information. This makes REALM particularly effective in scenarios where an in-depth understanding and up-to-date information are crucial for generating accurate responses.

REALM and RAG differ in their process integration: REALM uses a two-step approach, first retrieving documents with P(D∣Q) and then generating an answer with P(A∣Q,D), while RAG combines these steps into one P(A∣Q,D1,D2,…,Dn), blending retrieval and generation more directly.

Using REALM in a Simple Way with Hugging Face:

Incorporating REALM into your projects can be straightforward, especially when utilizing resources from Hugging Face's Transformers library. Hugging Face simplifies the process of applying advanced models like REALM, making it accessible even to those new to the field of AI.

REALM stands out for its ability to retrieve relevant information as part of its learning process, enhancing the quality of its responses. This is a significant step up from traditional language models, as it allows REALM to provide more accurate and context-aware answers.

To get started with REALM using Hugging Face, follow these basic steps:

Install the Necessary Libraries: Make sure you have Transformers and Torch installed in your environment. You can install them using pip if you haven't already.

Initialize the Components: Similar to using RAG, you will need to initialize the tokenizer, the retriever, and the model. Hugging Face hosts pre-trained versions that you can use directly.

Load the REALM Model: Hugging Face's model repository includes versions of REALM that are pre-trained and ready to use. This saves you the time and effort of training the model from scratch.

Here’s a simple code snippet demonstrating how to use REALM for a basic task:

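    # Import the required classes
    from transformers import RealmForOpenQA, RealmRetriever, RealmTokenizer

    # Open-domain QA checkpoint fine-tuned on Natural Questions
    checkpoint = "google/realm-orqa-nq-openqa"

    # Initialize the tokenizer, retriever, and model
    tokenizer = RealmTokenizer.from_pretrained(checkpoint)
    retriever = RealmRetriever.from_pretrained(checkpoint)
    model = RealmForOpenQA.from_pretrained(checkpoint, retriever=retriever)

    # Your query
    query = "What is the tallest mountain in the world?"

    # Tokenize and process the query (the model expects one question per call)
    inputs = tokenizer(query, return_tensors="pt")
    output = model(**inputs)

    # Decode and display the predicted answer span
    answer = tokenizer.decode(output.predicted_answer_ids, skip_special_tokens=True)
    print(f"Answer: {answer}")

This example is a basic illustration of using REALM for question answering. When you input a query, the model retrieves evidence blocks and extracts an answer span from them. It uses the google/realm-orqa-nq-openqa checkpoint together with a RealmRetriever, because RealmForOpenQA needs a retriever and an open-domain QA checkpoint to produce answers. REALM support appears to have been deprecated in newer Transformers releases, so an older version of the library may be needed. Keep in mind that REALM's performance is highly dependent on the quality of the retrieval and the relevance of the pre-trained model to your task.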

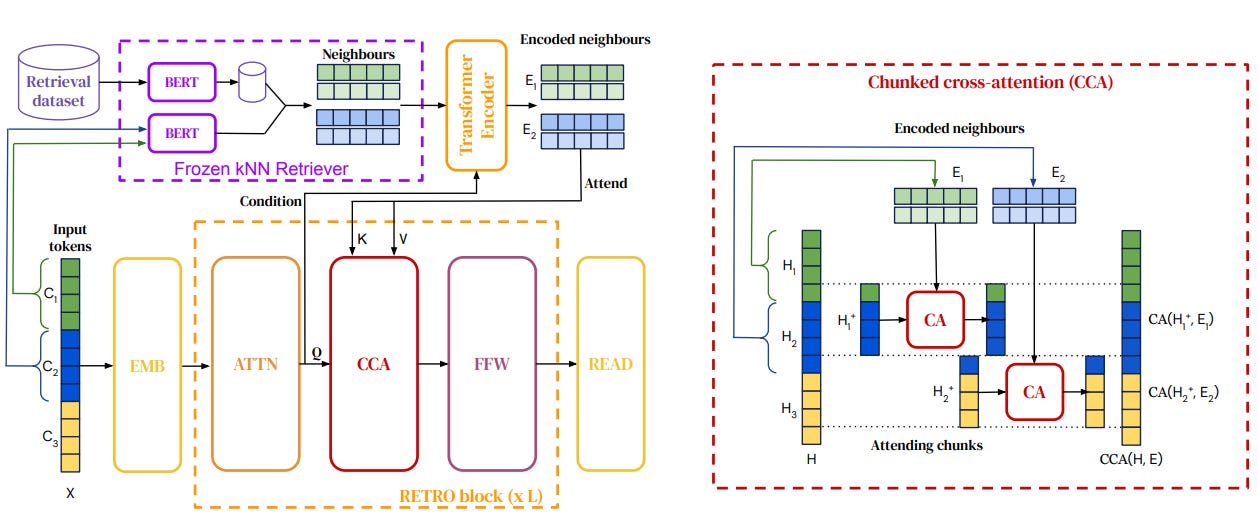

RETRO (Retrieval-Enhanced Transformer):

RETRO also uses a language model and a system to find information. But it tries to be efficient, meaning it works well without using too much computing power. RETRO is great for giving detailed answers to complicated questions. The trade-off is that it sometimes doesn't go as deep into the information as it could because it wants to stay efficient.

RETRO Mechanism:

Unlike models that solely rely on pre-trained knowledge or integrate retrieval in a multi-step process, RETRO embeds retrieval into the core of its language generation mechanism.

At its core, RETRO operates through a mechanism that involves two key components:

Retrieval Component: This part of RETRO is responsible for scanning through a vast database of text segments to find pieces of information relevant to a given query. The retrieval process is designed to be highly efficient, focusing on extracting the most pertinent information with minimal computational overhead.

Language Generation Component: Following the retrieval of relevant text segments, the language model component of RETRO takes over. It uses the retrieved information along with the original query to construct a coherent and contextually appropriate response.

The operational equation for RETRO can be represented as follows:

\[ P(A \mid Q, R_1, R_2, \ldots, R_n) \]

In this equation, A represents the answer generated by the model, Q is the input query, and R1,R2,…,Rn are the retrieved text segments relevant to the query. This formulation underscores RETRO's focus on efficiently leveraging external information (the retrieved segments) in concert with the query to produce a relevant response.

RETRO’s mechanism is distinct in its emphasis on the efficiency of retrieval and the integration of this retrieval directly into the response generation process. By doing so, RETRO aims to deliver highly contextual and accurate answers, even for complex queries, while maintaining computational efficiency. This balance makes RETRO a unique and valuable addition to the landscape of advanced language models.
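The retrieval component can be sketched with a toy example. The code below picks the n database segments that share the most words with the query and then places them next to the query, giving the R1, …, Rn and Q that the generation component conditions on. This is a deliberate simplification: the word-overlap score, the function names, and the tiny database are all hypothetical, and the actual RETRO model retrieves with learned embeddings and feeds the retrieved segments to the transformer through cross-attention rather than by concatenating text.

```python
def tokenize(text):
    """Lowercase whitespace tokenizer; a stand-in for a real tokenizer."""
    return set(text.lower().split())


def retrieve_segments(query, database, n=2):
    """Return the n segments with the highest Jaccard word overlap with the query."""
    query_tokens = tokenize(query)

    def jaccard(segment):
        seg_tokens = tokenize(segment)
        union = query_tokens | seg_tokens
        return len(query_tokens & seg_tokens) / len(union) if union else 0.0

    return sorted(database, key=jaccard, reverse=True)[:n]


def build_conditioning_input(query, segments):
    """Lay out the retrieved segments R1..Rn followed by the query Q."""
    context = "\n".join(f"[R{i}] {seg}" for i, seg in enumerate(segments, start=1))
    return f"{context}\n[Q] {query}"


# Hypothetical database of text segments.
database = [
    "solar power is a renewable energy source",
    "the capital of france is paris",
    "renewable energy reduces carbon emissions",
    "mount everest is the tallest mountain",
]

query = "benefits of renewable energy"
segments = retrieve_segments(query, database, n=2)
print(build_conditioning_input(query, segments))
```

The two energy-related segments are selected and the unrelated ones are dropped, so the generator only has to process a small amount of relevant text.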

Sketching RETRO Usage in the Hugging Face Style:

For those interested in experimenting with RETRO, note that, to my knowledge, the Hugging Face Transformers library does not ship an official RETRO implementation or pre-trained checkpoint. Experimenting with RETRO in practice means relying on a third-party re-implementation.

If RETRO were exposed through a Transformers-style interface, the basic steps would mirror those for the other models:

Installation: Ensure that the Transformers and Torch libraries are installed in your Python environment. You can easily install these using pip.

Initialization: As with other models, you would initialize the necessary components – the tokenizer and the RETRO model itself.

Load the RETRO Model: You would load a version of RETRO that has been pre-trained on a wide range of data. This pre-training is what makes the model robust and versatile for various applications.

Here's a simple example to illustrate what basic usage could look like. The class names and checkpoint ID are hypothetical placeholders, so this snippet will not run against the current Transformers library:

    # NOTE: RetroTokenizer, RetroForCausalLM, and "google/retro-large" are hypothetical
    # placeholders used for illustration; they are not part of the Transformers library.

    # Import necessary classes
    from transformers import RetroTokenizer, RetroForCausalLM

    # Initialize the tokenizer and model
    tokenizer = RetroTokenizer.from_pretrained("google/retro-large")
    model = RetroForCausalLM.from_pretrained("google/retro-large")

    # Example query
    query = "What are the benefits of renewable energy?"

    # Tokenize and process the query
    inputs = tokenizer(query, return_tensors="pt")
    output = model.generate(inputs.input_ids)

    # Decode and display the response
    response = tokenizer.decode(output[0], skip_special_tokens=True)
    print(f"Response: {response}")

Comparison and Future Directions:

RAG, REALM, and RETRO all try to improve language models by using outside information. They do it in different ways: RAG feeds retrieved documents to a generator alongside what the model already knows, REALM learns to find information and answer questions simultaneously, and RETRO focuses on being efficient. In the future, we might see models that can use the latest information and rely on semantic search to understand context better.

RAG, REALM, and RETRO are important steps toward language models that understand and use information better. Each one has its own strengths, and together they show how AI can use outside information to improve. In a later post, I will go deeper into the coding part (which is easy, since it will use Hugging Face libraries).